Keeping academic integrity top of mind

“Undergraduate and graduate students may use GenAI tools as learning aids, for example, to summarize information or test their understanding of a topic. These tools should be used in a manner similar to consulting library books, online sources, peers, or a tutor. Such uses are generally acceptable even if an instructor has stated that AI tools are not otherwise permitted in the course. These uses typically do not need to be cited or disclosed.”

(Office of the Vice-Provost, Innovations in Undergraduate Education: FAQs on GenAI in the Classroom, 2024)

However, if you have any questions or doubts about whether you should use GenAI in your assignments or other academic work beyond the uses described above, contact your course instructor or graduate supervisor for clarification.

Your responsibility: accuracy and fact-checking

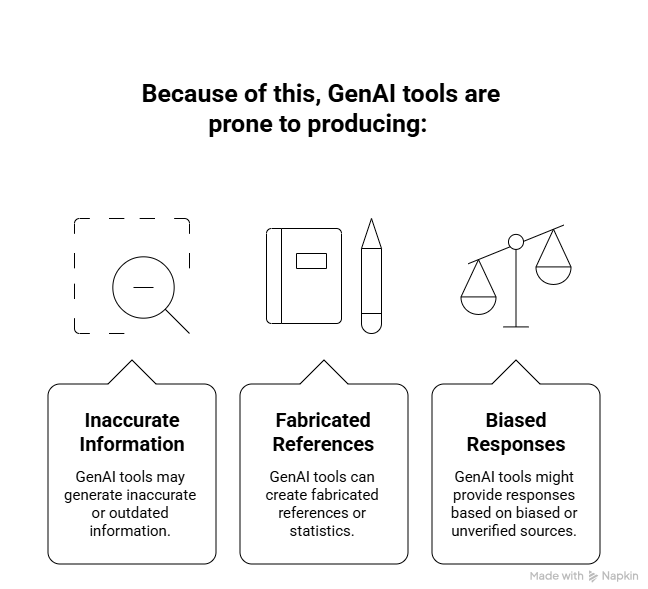

GenAI tools, such as ChatGPT or Copilot, are designed to generate text by identifying and reproducing patterns in large datasets. They do not possess a factual understanding of the world, nor do they have access to real-time information (unless explicitly connected to live databases).

Because of these limitations, AI tools can produce what are often referred to as hallucinations: instances where the AI generates information that is entirely false, partially fabricated, or misleadingly framed. In some cases, misinformation (false information shared without harmful intent) or disinformation (false information shared with the intent to deceive) can also be embedded in responses because of the data the AI was trained on. For additional information about GenAI hallucinations and dis/misinformation, see the [Understand Content] module.

As a university student, you are expected to evaluate and verify any information used in your academic work, whether it originates from a scholarly database, a textbook, or a GenAI tool. You should also indicate the source of content, including text, code, or images generated by AI-enabled tools, in line with evolving scholarly norms for citation and attribution. This makes the source clear for future reference.

Your responsibility includes:

- Critically assessing the accuracy and credibility of AI-generated responses

- Cross-referencing claims against reliable and peer-reviewed sources

- Understanding the limitations of AI tools as non-expert systems

- Attributing, or stating your use of, AI-enabled tools for content generation

Relying solely on GenAI content without fact-checking undermines academic integrity and can contribute to the spread of misinformation.

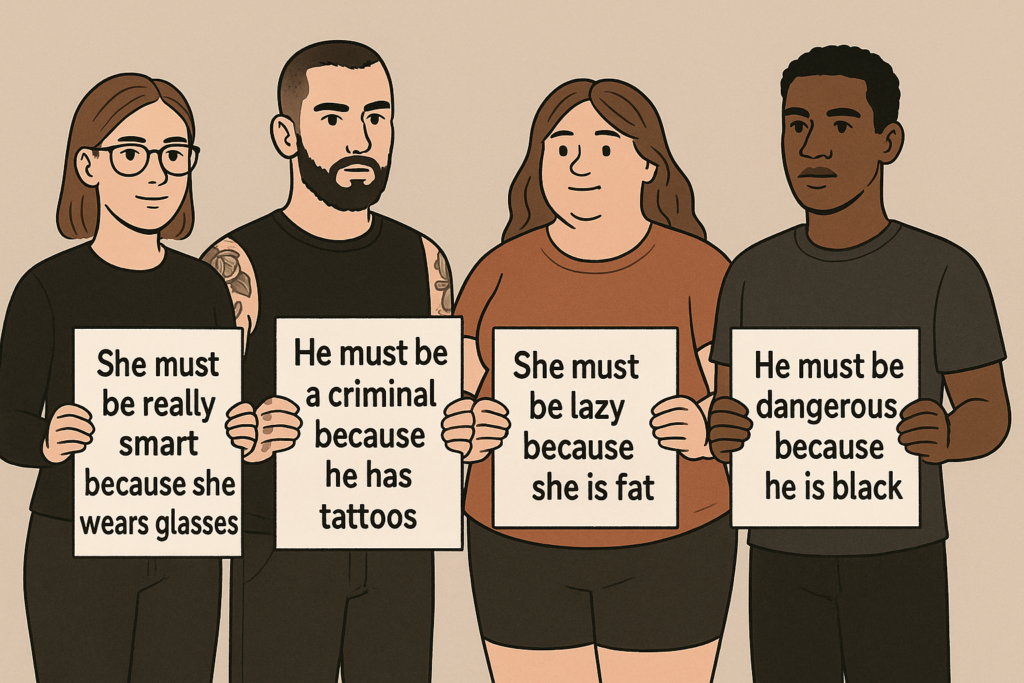

Beware of bias

Generative AI tools are trained on large-scale datasets that reflect the content and biases of the internet and broader cultural production. As a result, AI outputs may reinforce those biases.

These problematic patterns are not intentional, but they are deeply embedded in the data. Understanding this helps you recognize why it is your responsibility to ensure that they are not replicated in your own work.

Practice Activity: Explore and uncover model bias

To explore how LLMs may reflect societal or cultural biases embedded in their training data, you can look for patterns in how they describe gender, race, class, or other identity markers. Follow these steps:

1. Create Sentence Prompts:

- Think of a few sentence-completion or image-generation prompts that describe people in professions, behaviours, or situations, for example:

- The CEO walked into the room and…

- The nurse walked into the room and…

2. Input Sentences into Chatbots:

- Use a chatbot like Microsoft Copilot or ChatGPT to respond to the prompt.

- Complete this sentence: The CEO walked into the room and…

3. Repeat and Reflect:

- Repeat this process multiple times with different professions and compare the results.

- What assumptions does the model seem to make?

- Do different professions lead to different types of completions?

- What kinds of names, genders, or descriptors are inferred by the model, and are they stereotypical?
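If you collect many completions, a short script can help you spot patterns that are hard to see by eye. The following is a minimal sketch in Python, not a validated bias measure. It assumes you have pasted the chatbot's completions into lists by hand. The function name `tally_gendered_words`, the word lists, and the sample strings are all hypothetical and written only for illustration; the sample strings are not real chatbot output.

```python
import re
from collections import Counter

# Hypothetical word lists for this sketch; extend them for the
# identity markers you want to study.
GENDERED_WORDS = {
    "masculine": {"he", "him", "his", "himself"},
    "feminine": {"she", "her", "hers", "herself"},
    "neutral": {"they", "them", "their", "theirs", "themself", "themselves"},
}


def tally_gendered_words(completions):
    """Count masculine, feminine, and neutral pronouns in a list of completions."""
    counts = Counter({label: 0 for label in GENDERED_WORDS})
    for text in completions:
        # Lowercase and split into alphabetic words so "She" and "she," both match.
        for word in re.findall(r"[a-z]+", text.lower()):
            for label, words in GENDERED_WORDS.items():
                if word in words:
                    counts[label] += 1
    return counts


# Placeholder strings written for this sketch, NOT real chatbot output.
# Replace them with the completions you collected yourself.
completions_by_prompt = {
    "The CEO walked into the room and…": [
        "he greeted his team.",
        "she opened her laptop.",
    ],
    "The nurse walked into the room and…": [
        "she checked the chart.",
        "they washed their hands.",
    ],
}

for prompt, completions in completions_by_prompt.items():
    print(prompt, dict(tally_gendered_words(completions)))
```

Pronoun counts are only a rough signal. Singular and plural "they" are counted together, and names, job titles, and descriptors are ignored, so read the completions yourself before drawing conclusions.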